
BACKGROUND ON POULTRY WELFARE

Poultry production plays a critical role in feeding the world’s growing population with affordable protein (i.e., chicken and eggs). The United States is currently the world’s largest broiler producer and second-largest egg producer, owing to continuous innovation in animal breeding, nutrition management, environmental control, and disease prevention.

However, US poultry and egg farms face several production challenges, including animal welfare concerns.

- For instance, fast-growing broiler chickens have been reported to suffer from leg problems or lameness.

- Caged egg production systems are under pressure to shift to cage-free operation, which does not guarantee better hen welfare because of high mortality, high injury rates, and poor air quality.

To address those issues, researchers at the University of Georgia (UGA, Dr. Lilong Chai’s precision poultry farming lab) developed several precision farming technologies for monitoring welfare and behaviors of broilers and cage-free layers.

BROILERS’ FLOOR DISTRIBUTION AND BEHAVIORS

The spatial distribution of broiler chickens on the floor indicates whether a flock is healthy. In commercial houses, floor distribution is inspected manually once or several times a day, which is labor intensive, time consuming, and subject to human error.

- This task calls for a precision system that can monitor chickens’ floor distribution automatically.

- A machine vision-based method was developed and tested in an experimental broiler house at UGA. To track individual birds’ distribution, the pen floor was virtually defined/divided into drinking, feeding, and rest/exercise zones (Figure 1).

- About 7,000 chicken areas/profiles were used to build a backpropagation (BP) neural network model for floor distribution analysis.

- The results showed that the identification accuracies of bird distribution in the drinking and feeding zones were 0.9419 and 0.9544, respectively.
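The zone-based counting described above can be illustrated with a minimal sketch. It assumes bird centroids are already available as pixel coordinates from some detector, and it treats the drinking and feeding zones as axis-aligned rectangles with everything else counted as rest/exercise. The zone coordinates, the `Zone` class, and the function names are hypothetical and are not taken from the UGA system.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangular zone in image (pixel) coordinates."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical zone layout for a 640 x 480 top-view image (assumption).
ZONES = [
    Zone("drinking", 0, 0, 200, 480),
    Zone("feeding", 440, 0, 640, 480),
]
DEFAULT_ZONE = "rest/exercise"  # any point outside the zones above


def zone_of(x: float, y: float, zones=ZONES) -> str:
    """Return the name of the first zone containing the point."""
    for zone in zones:
        if zone.contains(x, y):
            return zone.name
    return DEFAULT_ZONE


def zone_counts(centroids, zones=ZONES) -> dict:
    """Count how many bird centroids fall in each zone."""
    counts = Counter({zone.name: 0 for zone in zones})
    counts[DEFAULT_ZONE] = 0
    for x, y in centroids:
        counts[zone_of(x, y, zones)] += 1
    return dict(counts)


if __name__ == "__main__":
    birds = [(50, 100), (500, 300), (320, 240), (600, 10)]
    print(zone_counts(birds))
```

Counting per zone over time gives a simple distribution signal; how the published model derives its zone assignments from bird areas/profiles may differ from this centroid-based simplification.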

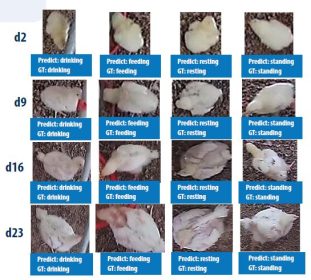

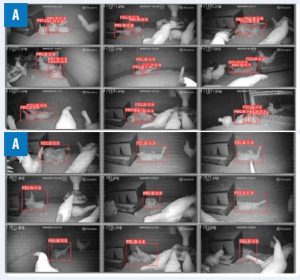

- The team further developed convolutional neural network (CNN)-based deep learning models to detect birds’ feeding, drinking, resting, and standing behaviors at different ages (Figure 2 & Figure 3).

- After image augmentation, over 10,000 images were generated for each day, and the model reached accuracies of 88.5%, 97%, 94.5%, and 90% in detecting broiler chickens on the floor when birds were 2, 9, 16, and 23 days old, respectively (Figure 4).
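The article does not say which augmentation operations were used. As an illustration only, the sketch below shows two common ones, a horizontal flip and a brightness change, on a grayscale image stored as nested lists so that it runs without any imaging library. For a detection dataset the bounding-box labels have to be transformed together with the image, which is the part that is easy to get wrong. All function names and the brightness factors are assumptions.

```python
def hflip(image):
    """Mirror a grayscale image (list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def hflip_box(box, width):
    """Mirror an (x_min, y_min, x_max, y_max) box inside an image of given width."""
    x_min, y_min, x_max, y_max = box
    return (width - x_max, y_min, width - x_min, y_max)


def adjust_brightness(image, factor):
    """Scale pixel intensities and clip the result to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, boxes, factors=(0.8, 1.2)):
    """Return a list of (image, boxes) variants derived from one labeled image."""
    width = len(image[0])
    # The flip moves the birds, so the boxes must be mirrored as well.
    samples = [(hflip(image), [hflip_box(b, width) for b in boxes])]
    # Brightness changes leave the geometry, and therefore the boxes, untouched.
    for factor in factors:
        samples.append((adjust_brightness(image, factor), list(boxes)))
    return samples


if __name__ == "__main__":
    image = [[0, 100, 200], [50, 150, 250]]
    boxes = [(0, 0, 1, 2)]  # one hypothetical bird in the left column
    for variant, variant_boxes in augment(image, boxes):
        print(variant, variant_boxes)
```

Each source image yields three variants here; a real pipeline would typically use an image library and a wider set of transforms to reach the image counts reported above.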

Figure 1. Top view of a pen with zone definitions. The red boxes mark (1) the drinking zone, (2) the feeding zone, and (3) the resting/exercise zone.

Figure 4. Broiler detection on the floor.

CAGE FREE LAYERS’ PECKING, MISLAYING, AND DISTRIBUTION

Major restaurants and grocery chains in the United States have pledged to buy only cage-free (CF) eggs by 2025 or 2030.

- While CF houses allow hens to perform more natural behaviors (e.g., dust bathing, perching, and foraging on the litter floor), cage-free systems face particular challenges such as high mortality and injury rates and floor eggs.

- Pecking is one of the primary welfare issues in commercial cage-free hen houses because it can seriously reduce the well-being of birds and cause economic losses for egg producers.

- Because beak trimming has been highly criticized in Europe and the USA, alternative methods are needed for monitoring and managing pecking.

- Because feather pecking is a learned behavior, one way to minimize the problem is to detect pecking behavior and damage early, before it spreads or intensifies.

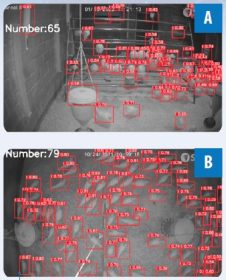

Machine vision methods were developed and tested in research cage-free facilities at UGA for tracking chickens’ floor and spatial distribution (Figure 5 and Figure 6) and for identifying hens’ pecking behaviors and potential damage (Figure 7 and Figure 8). The YOLOv5x-pecking model achieved a precision of 88.3% in detecting pecking.
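For readers unfamiliar with the metric, precision is the share of the model's detections that are correct: true positives divided by all predictions. The sketch below shows one common way to compute it for bounding-box detections, by matching each prediction to an unused ground-truth box through intersection over union (IoU). The 0.5 IoU threshold, the box format, and the function names are assumptions for illustration; the article does not state how the reported precision was evaluated.

```python
def area(box):
    """Area of an (x_min, y_min, x_max, y_max) box."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a, b):
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def precision(predictions, ground_truth, iou_threshold=0.5):
    """Fraction of predicted boxes that match a distinct ground-truth box.

    `predictions` is assumed to be sorted by confidence, highest first,
    so that the most confident detections claim ground-truth boxes first.
    """
    if not predictions:
        return 0.0
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            unmatched.remove(best)  # each ground-truth box is used once
            true_positives += 1
    return true_positives / len(predictions)


if __name__ == "__main__":
    preds = [(0, 0, 10, 10), (20, 20, 30, 30), (100, 100, 110, 110)]
    truth = [(1, 1, 10, 10), (20, 20, 30, 31)]
    print(f"precision = {precision(preds, truth):.3f}")
```

A precision of 88.3% therefore means that roughly 88 of every 100 pecking detections flagged by the model were correct; it says nothing about how many pecking events were missed, which is measured by recall.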

Figure 7. Pecking behavior and damage in layers.

Figure 8. Performance of the YOLOv5-pecking deep learning model in pecking detection: a – pecking in a rest zone; b – pecking in a feeding zone; c – pecking in a drinking zone; d – two birds pecking one bird (i.e., the same bird as in c, pecked by two birds at the same time).

In addition, about 5,400 images were collected and used to train another deep learning model (YOLOv5m-FELB, for floor egg laying behavior), which reached a precision of 90% (Figure 9).

The method could also be used to detect or scan for floor eggs (Figure 10).

SUMMARY

Several machine vision and deep learning methods were developed at the University of Georgia’s poultry science department to monitor the welfare and behaviors of broilers and cage-free layers.

These findings provide references for developing precision poultry farming systems on commercial broiler and egg farms to address issues associated with poultry production, welfare, and health. Dr. Lilong Chai’s projects were sponsored by USDA-NIFA, USDA ARS, the Egg Industry Center, the Georgia Research Alliance, UGA, Oracle, and poultry companies.

Bibliography available upon request