
INTRODUCTION

- About 20,000-30,000 birds are raised in a single commercial broiler house in the US today, which has prompted growing public concern about animal welfare.

- Daily evaluation of broiler well-being and growth is conducted manually, which is labor intensive and subject to human error. Therefore, there is a need for an automatic tool to detect and analyze the behaviors of chickens and predict their welfare status.

- Deep learning technology offers powerful feature representation and fast processing, and it can resolve problems associated with external interference.

- Thus, deep learning algorithms are appropriate models for developing an automatic, efficient, intelligent tool for precision animal farming.

- However, the size of the chicken and the sheer numbers raised in a single house pose challenges in applying deep learning techniques in monitoring individual chickens.

- In the current study, we integrated the convolutional block attention module (CBAM) into YOLOv5 to enhance the algorithm’s ability to extract image features.
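
For readers unfamiliar with CBAM, the block below restates the module as it is defined in the original CBAM publication (Woo et al., 2018): a feature map $F$ is refined in two sequential steps, channel attention followed by spatial attention. The notation is that of the CBAM paper and is not taken from this summary, which does not state where in the YOLOv5 network the module was inserted.

```latex
\begin{aligned}
F'  &= M_c(F) \otimes F, \\
F'' &= M_s(F') \otimes F', \\
M_c(F) &= \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big), \\
M_s(F) &= \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F);\ \mathrm{MaxPool}(F)])\big).
\end{aligned}
```

Here $\otimes$ is element-wise multiplication, $\sigma$ is the sigmoid function, the MLP is shared between the two pooled inputs, and $f^{7 \times 7}$ is a convolution with a 7 × 7 kernel. Pooling in $M_c$ runs over the spatial dimensions, while pooling in $M_s$ runs along the channel axis.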

METHODS

- This study was conducted in an experimental broiler house at the Poultry Research Center of the University of Georgia, Athens, USA.

- High-definition cameras (PRO-1080MSFB, Swann Communications, Santa Fe Springs, CA) were mounted on the ceiling (2.5 m above the floor) to capture video (15 frames/s, 1440 pixels × 1080 pixels).

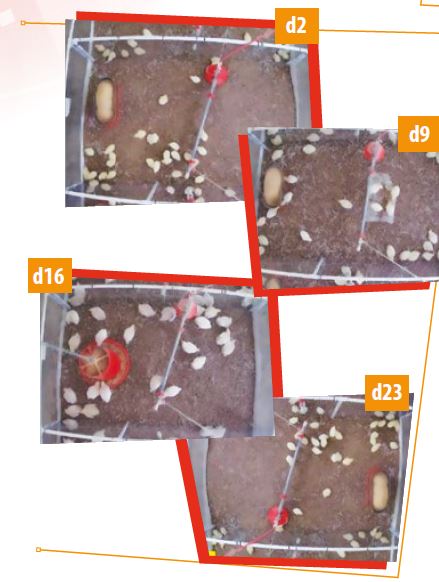

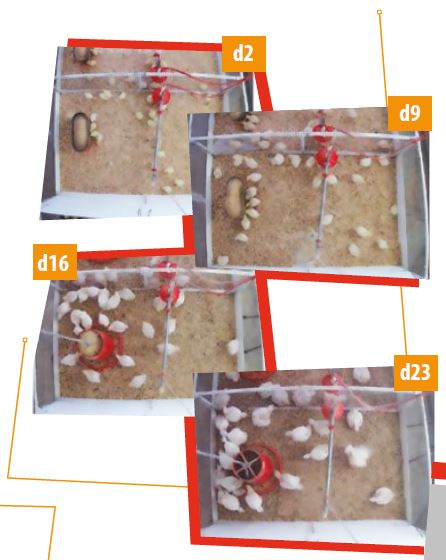

- Two litter types (fresh pine shavings and reused litter previously used to raise three flocks of broilers) were selected as application scenes for broiler detection. For each of the two litter scenes, 70 images were selected on each of d2, d9, d16, and d23, for a total of 560 images.

- In addition, to evaluate the detection performance of the model in multi-pen scenes, the image samples shown in Fig. 1c were constructed, with 70 images selected on each of d16 and d23 (140 images).

- Finally, 700 images were obtained and randomly divided into training and testing sets at a ratio of 5:2.

- Figure 1 shows examples of broiler images from different scenes.
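
The image counts and the 5:2 split described above can be checked with a short sketch. The counts come from the text; the file names, the `split_dataset` helper, and the random seed are hypothetical, since the summary does not say how the random split was implemented.

```python
import random

# Image counts as reported in the text.
LITTER_SCENES = 2        # fresh pine shavings, reused litter
LITTER_DAYS = 4          # d2, d9, d16, d23
MULTI_PEN_DAYS = 2       # d16, d23
IMAGES_PER_GROUP = 70

litter_images = LITTER_SCENES * LITTER_DAYS * IMAGES_PER_GROUP   # 560
multi_pen_images = MULTI_PEN_DAYS * IMAGES_PER_GROUP             # 140
total_images = litter_images + multi_pen_images                  # 700


def split_dataset(items, train_parts=5, test_parts=2, seed=0):
    """Shuffle items and split them at a train_parts:test_parts ratio.

    The seed is a hypothetical choice for reproducibility; the source
    does not describe how the random split was performed.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_parts // (train_parts + test_parts)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder file names; the real image names are not given in the source.
image_names = [f"img_{i:03d}.png" for i in range(total_images)]
train_set, test_set = split_dataset(image_names)
print(len(train_set), len(test_set))  # 500 200
```

With 700 images, a 5:2 ratio gives 500 training images and 200 testing images.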

FINDINGS

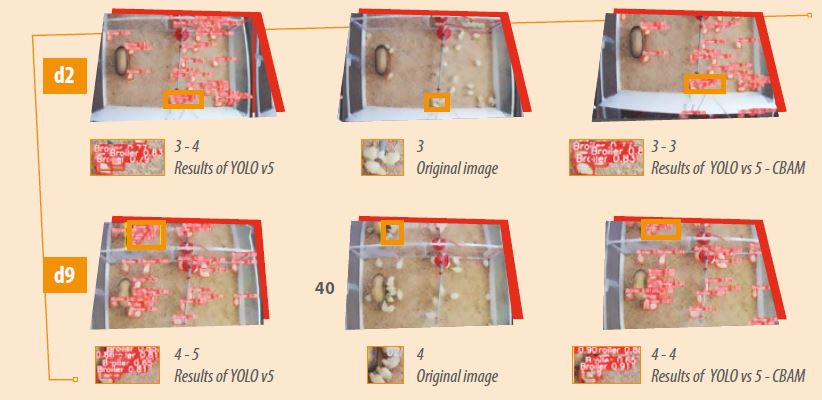

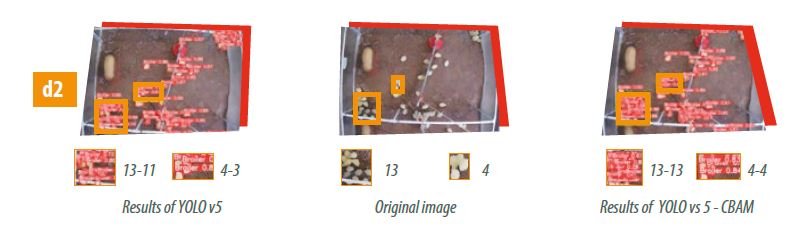

Figures 2 and 3 show the detection results of broilers with YOLOv5 and YOLOv5-CBAM on fresh pine shaving and reused litter floors, respectively.

- The first column shows the detection results of YOLOv5, the second column shows the original images, and the third column shows the detection results of YOLOv5-CBAM; i is the actual number of broilers, and j is the number of broilers detected.

- YOLOv5-CBAM detected broilers with higher precision than YOLOv5 and still provided better detection results for crowded or small targets.

- We used datasets consisting of broiler images of different ages, raised on two types of litter and in multiple pens, to test the applicability and effectiveness of YOLOv5-CBAM.

The precision, recall, F1, and mAP@0.5 of YOLOv5-CBAM were 97.3%, 92.3%, 94.7%, and 96.5%, compared with 96.6%, 92.1%, 94.3%, and 96.3% for YOLOv5; 79.7%, 95.4%, 86.8%, and 90.6% for Faster R-CNN; and 60.8%, 94.0%, 73.8%, and 88.5% for SSD. YOLOv5-CBAM scored highest on precision, F1, and mAP@0.5, although Faster R-CNN and SSD had higher recall.

The results show that the overall performance of the proposed YOLOv5-CBAM was the best.
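
As a consistency check on the reported metrics, F1 can be recomputed from precision and recall, assuming the standard definition of F1 as their harmonic mean (the summary does not spell the formula out). The precision and recall values are those reported above; the function and variable names are illustrative only.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) as reported in the text.
reported = {
    "YOLOv5-CBAM": (97.3, 92.3),
    "YOLOv5": (96.6, 92.1),
    "Faster R-CNN": (79.7, 95.4),
    "SSD": (60.8, 94.0),
}

f1_by_model = {
    name: round(f1_score(p, r), 1) for name, (p, r) in reported.items()
}
for name, f1 in f1_by_model.items():
    print(f"{name}: F1 = {f1}%")
```

The recomputed values match the reported F1 scores (94.7%, 94.3%, 86.8%, and 73.8%). They also show why Faster R-CNN and SSD rank lower overall despite their higher recall: their much lower precision pulls the harmonic mean down.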

- Adding the CBAM module to the YOLOv5 network improved the performance of the broiler detection model.

- It also showed that the model YOLOv5-CBAM was suitable for detecting broilers at different growth stages, in different litter types, and in multiple pens.

- In addition, the detection speed of YOLOv5-CBAM was 55 frames/s, which was lower than that of YOLOv5 (62 frames/s) but higher than those of Faster R-CNN (2.6 frames/s) and SSD (3.1 frames/s).
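
To put the speed figures in context, the sketch below converts each reported frame rate into a per-frame processing time and compares it with the 15 frames/s capture rate of the cameras described in METHODS. This comparison is an illustration added here, not a claim made in the study, and the hardware on which the speeds were measured is not stated in this summary.

```python
CAMERA_FPS = 15  # capture rate of the ceiling cameras, from the text

# Detection speed (frames/s) as reported in the text.
model_fps = {
    "YOLOv5-CBAM": 55,
    "YOLOv5": 62,
    "Faster R-CNN": 2.6,
    "SSD": 3.1,
}


def per_frame_ms(fps):
    """Average processing time per frame in milliseconds."""
    return 1000.0 / fps


# A model keeps up with the camera if it processes frames at least as
# fast as they are captured.
keeps_up = {name: fps >= CAMERA_FPS for name, fps in model_fps.items()}

for name, fps in model_fps.items():
    print(f"{name}: {per_frame_ms(fps):.1f} ms/frame, "
          f"keeps up with camera: {keeps_up[name]}")
```

Under these assumptions, both YOLOv5 variants process frames faster than the cameras capture them, whereas Faster R-CNN and SSD do not.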

SUMMARY

- In this study, we developed a YOLOv5-CBAM-broiler model and tested its performance for detecting broilers on litter floors.

- A complex dataset of broiler chicken images at different ages, multiple pens, and scenes (fresh litter versus reused litter) was constructed to evaluate the effectiveness of the new model.

- The results demonstrate that the proposed approach achieved a precision of 97.3%, which outperformed Faster R-CNN, SSD, and YOLOv5.

- Overall, the proposed deep learning-based broiler detection approach can achieve accurate and fast real-time target detection and provide technical support for managing and monitoring birds in commercial broiler houses.

Further reading: Guo, Y., S. E. Aggrey, X. Yang, A. Oladeinde, Y. Qiao, L. Chai. (2023) Detecting broiler chickens on litter floor with the YOLOv5-CBAM deep learning model. Artificial Intelligence in Agriculture, 9: 36-45. https://doi.org/10.1016/j.aiia.2023.08.002.